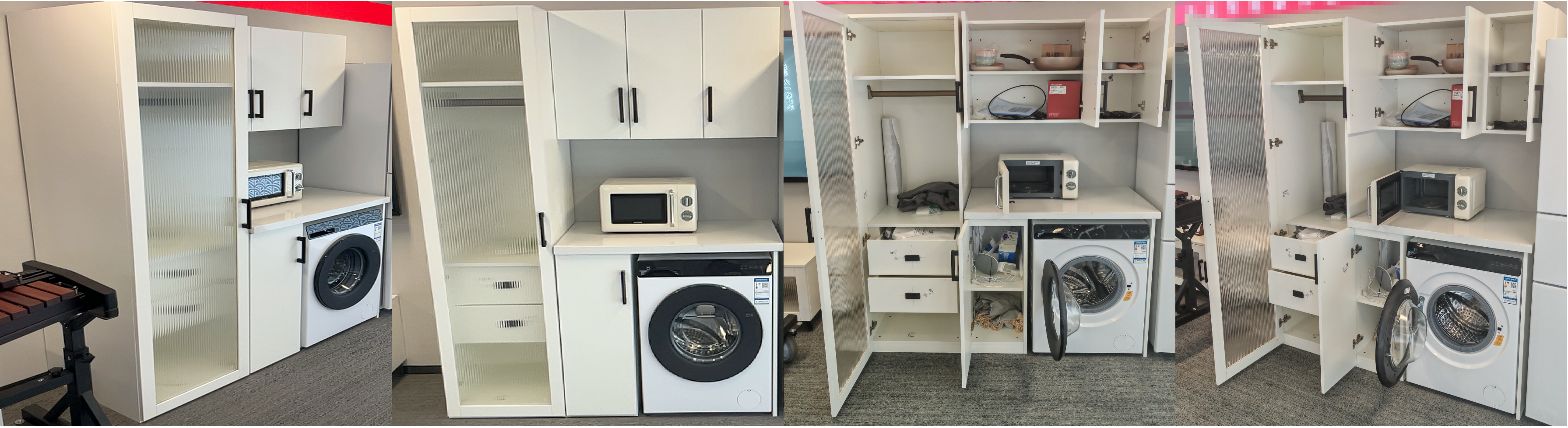

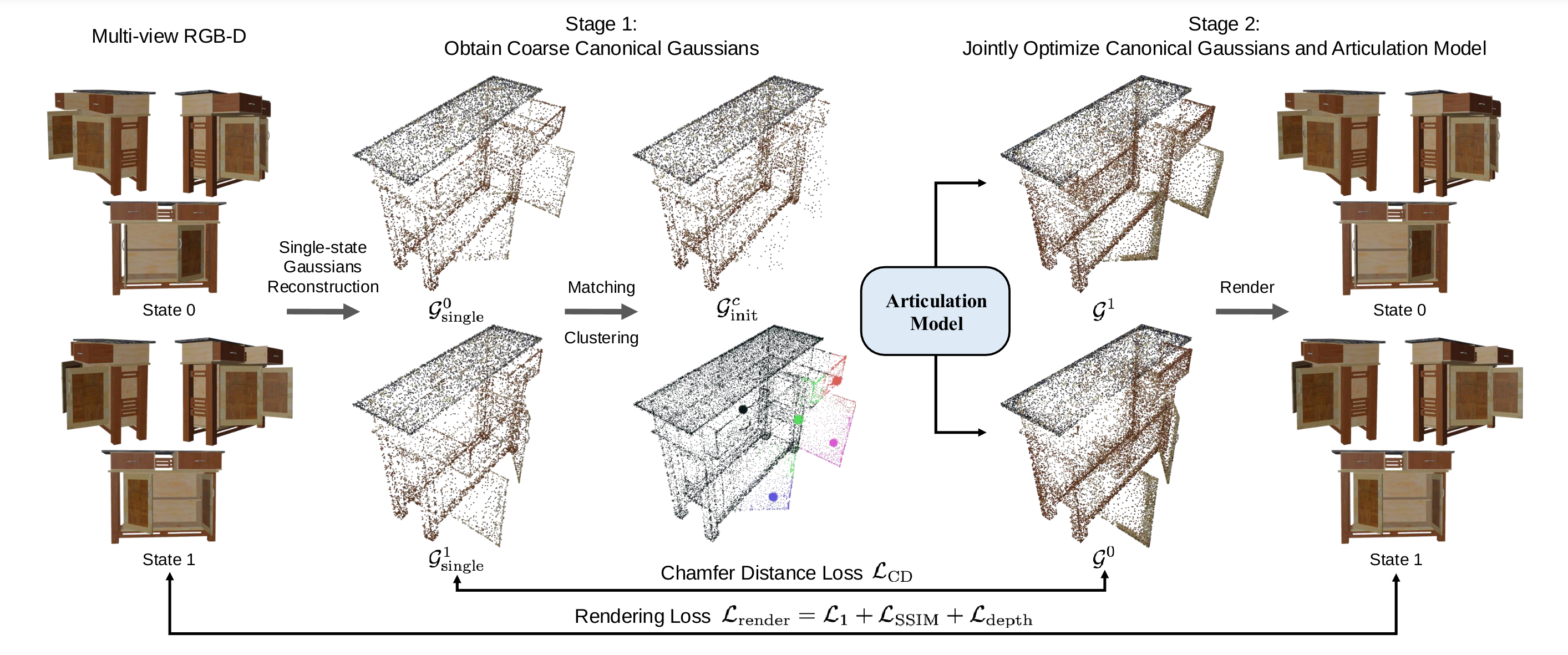

Method Overview

Overview of ArtGS. Our method is divided into two stages: (i) obtaining coarse canonical Gaussians \(G^c_{\text{init}}\) by matching the Gaussians \(G^0_{\text{single}}\) and \(G^1_{\text{single}}\), each trained on a single state individually, and initializing the part assignment module with the cluster centers; and (ii) jointly optimizing the canonical Gaussians \(G^c\) and the articulation model, which comprises the articulation parameters \(\Phi\) and the part assignment module.
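To make stage (i) more concrete, here is a minimal NumPy sketch that works on Gaussian centers only. It relies on several assumptions not stated in the caption. Gaussians are matched by nearest neighbor between the two states. The coarse canonical center is the midpoint of each matched pair. Part centers are initialized by k-means, seeded with farthest-point sampling, on position concatenated with scaled displacement. All function names and the `motion_weight` parameter are hypothetical illustrations. This is not the ArtGS implementation.

```python
import numpy as np


def match_nearest(mu0: np.ndarray, mu1: np.ndarray) -> np.ndarray:
    """For each Gaussian center in state 0, return the index of the nearest center in state 1."""
    # Pairwise squared distances, shape (N0, N1); brute force is fine for a sketch.
    d2 = ((mu0[:, None, :] - mu1[None, :, :]) ** 2).sum(axis=-1)
    return d2.argmin(axis=1)


def coarse_canonical(mu0: np.ndarray, mu1: np.ndarray):
    """Hypothetical coarse canonical init: midpoint of each matched pair of centers."""
    matched = mu1[match_nearest(mu0, mu1)]
    canonical = 0.5 * (mu0 + matched)
    displacement = matched - mu0  # motion of each Gaussian from state 0 to state 1
    return canonical, displacement


def farthest_point_init(x: np.ndarray, k: int) -> np.ndarray:
    """Deterministic seeding: start at x[0], then repeatedly pick the farthest point."""
    idx = [0]
    d2 = ((x - x[0]) ** 2).sum(axis=-1)
    for _ in range(1, k):
        i = int(d2.argmax())
        idx.append(i)
        d2 = np.minimum(d2, ((x - x[i]) ** 2).sum(axis=-1))
    return x[idx].copy()


def kmeans(x: np.ndarray, k: int, iters: int = 20):
    """Plain Lloyd iterations; returns cluster centers and per-point labels."""
    centers = farthest_point_init(x, k)
    labels = np.zeros(len(x), dtype=int)
    for _ in range(iters):
        labels = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1).argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = x[labels == j].mean(axis=0)
    return centers, labels


def init_part_centers(canonical, displacement, num_parts, motion_weight=10.0):
    """Cluster Gaussians on (position, weighted motion); return part centers in canonical space."""
    features = np.concatenate([canonical, motion_weight * displacement], axis=1)
    centers, labels = kmeans(features, num_parts)
    return centers[:, :3], labels


if __name__ == "__main__":
    # Toy object: a static part near x=0 and a part near x=5 that moves along z.
    base = np.array([[0.0, 0, 0], [0.1, 0, 0], [0, 0.1, 0], [0.1, 0.1, 0]])
    mu0 = np.vstack([base, base + [5.0, 0, 0]])
    mu1 = np.vstack([base, base + [5.0, 0, 0.05]])
    canonical, disp = coarse_canonical(mu0, mu1)
    part_centers, labels = init_part_centers(canonical, disp, num_parts=2)
    print(labels)
    print(part_centers)
```

Clustering on motion as well as position is one plausible way to separate a moving part from a static one that lies close to it. Weighting displacement by `motion_weight` trades off spatial compactness against motion similarity. In the sketch, these part centers would then serve as the initial state for a learned assignment module that stage (ii) refines jointly with \(\Phi\).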